Deploying a Platform for Greater Knowledge and Efficiency

It’s clear that the technology to support strategic management is available today, but the challenge is assembling it in a way that delivers the most value for the investment.

There is no shortage of vendors offering their own take in the form of solutions, frameworks, architectures, or platforms. Frameworks and architectures assume that the buyer is primarily purchasing services to build a solution. Platforms assume a solution that is “ready for use,” requiring fewer services to implement and maintain.

Regardless of the label, utility decision makers should be able to quickly access and analyze quality data. The ideal approach is a platform that can:

- Support streaming data from equipment and sensors. A utility's asset base will remain heterogeneous, with equipment, control systems, and communication protocols from multiple manufacturers deployed as a result of replacement cycles, vendor preferences, legacy processes, and mergers. The platform therefore has to ingest streams across all of them.

- Quickly access multiple types and sources of data. Data may come from internal sources (e.g., equipment sensors, IoT devices, historians, control systems, and business applications such as EAM, ERP, and GIS) and/or external sources (e.g., manufacturer specifications, exploded-view diagrams, and localized weather data). It will be a mix of structured and unstructured, time-series and transactional data. This requires a utility data model built on industry-recognized standards such as the Common Information Model (CIM), with the understanding that standards are a “work in progress.”

- Deliver high-quality data. One of the chief complaints of utility decision makers is the poor quality of data in existing applications. Applying analytics during the data processing stage can automate much of the work of improving it, for example by flagging missing, out-of-range, or stale readings before they reach decision makers.

- Analyze the data in context. Analytics on individual assets are the first level; a next-generation approach views each asset in context. Whether dealing with a plant or a pipeline, a utility needs to understand which equipment is critical to the process and which connected assets are upstream or downstream of the asset in question. A compressor, for instance, may not be considered critical to operations on its own, yet it may supply a piece of equipment that is critical to the process. For power networks, this kind of analysis enables whole-network reliability maintenance and network-driven criticality and consequence analysis. The platform should let a utility manage any asset (unlike some solutions that focus only on high-value assets) and model how assets are interconnected.
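The ingestion and data-quality requirements above can be illustrated with a small sketch, assuming two hypothetical vendor payload formats. Each adapter maps its vendor's fields and units into one common record, and a quality check then flags missing, out-of-range, or stale values. The `Reading` class, the vendor field names (`temp_f`, `valueC`, and so on), the valid range, and the 15-minute staleness limit are all illustrative assumptions; a production platform would map into a standards-based model such as CIM rather than this simplified record.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical common record (a simplified stand-in, not the CIM schema).
@dataclass
class Reading:
    asset_id: str
    measurement: str
    value: Optional[float]
    unit: str
    timestamp: datetime
    issues: list = field(default_factory=list)

def from_vendor_a(msg: dict) -> Reading:
    """Hypothetical vendor A: Fahrenheit values, epoch-second timestamps."""
    temp_f = msg.get("temp_f")
    celsius = None if temp_f is None else (temp_f - 32) * 5 / 9
    return Reading(msg["device"], "temperature", celsius, "degC",
                   datetime.fromtimestamp(msg["ts"], tz=timezone.utc))

def from_vendor_b(msg: dict) -> Reading:
    """Hypothetical vendor B: Celsius values, ISO 8601 timestamps with offset."""
    return Reading(msg["assetTag"], "temperature", msg.get("valueC"), "degC",
                   datetime.fromisoformat(msg["time"]))

# Illustrative quality rules only.
VALID_RANGE = {"temperature": (-40.0, 150.0)}
MAX_AGE = timedelta(minutes=15)

def check_quality(r: Reading, now: datetime) -> Reading:
    """Append a label to r.issues for each rule the reading violates."""
    if r.value is None:
        r.issues.append("missing value")
    else:
        low, high = VALID_RANGE[r.measurement]
        if not low <= r.value <= high:
            r.issues.append("out of range")
    if now - r.timestamp > MAX_AGE:
        r.issues.append("stale")
    return r

now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
a = check_quality(from_vendor_a(
    {"device": "XFMR-12", "temp_f": 500.0, "ts": now.timestamp()}), now)
b = check_quality(from_vendor_b(
    {"assetTag": "XFMR-7", "valueC": None, "time": "2024-05-01T11:00:00+00:00"}), now)
print(a.issues)  # ['out of range']
print(b.issues)  # ['missing value', 'stale']
```

Keeping one adapter per source means a new manufacturer or protocol only requires a new mapping function, while the quality rules stay in one place.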

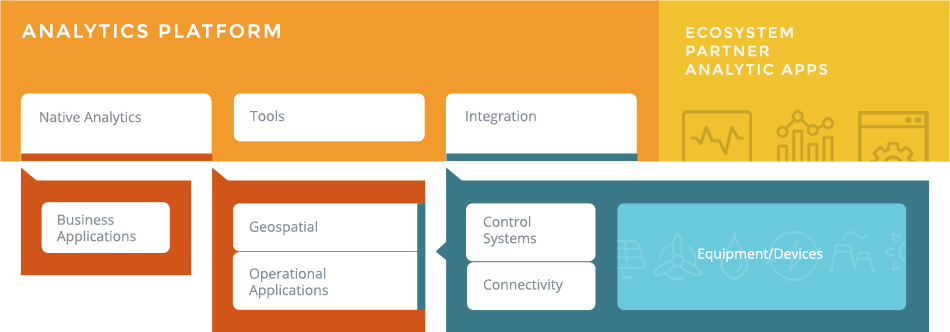
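The compressor example can be made concrete with a minimal sketch of network-driven criticality, assuming assets are modeled as a directed graph whose edges point from an asset to the assets it supplies. Under the simple rule used here (an assumption, not a method stated in the text), an asset is at least as critical as anything it supplies directly or indirectly. The asset names and scores are hypothetical.

```python
from collections import deque

# Hypothetical asset network: each asset maps to the assets it supplies.
SUPPLIES = {
    "compressor-3": ["turbine-1"],
    "pump-2": ["cooling-loop"],
    "cooling-loop": ["turbine-1"],
    "turbine-1": [],
    "lighting-panel": [],
}

# Hypothetical standalone criticality scores (higher means more critical).
INTRINSIC = {
    "compressor-3": 1,
    "pump-2": 2,
    "cooling-loop": 2,
    "turbine-1": 5,
    "lighting-panel": 1,
}

def downstream(asset, graph):
    """Breadth-first search: every asset reachable by following supply edges."""
    seen, queue = set(), deque(graph.get(asset, []))
    while queue:
        nxt = queue.popleft()
        if nxt not in seen:
            seen.add(nxt)
            queue.extend(graph.get(nxt, []))
    return seen

def effective_criticality(graph, intrinsic):
    """Raise each asset's score to the highest score among assets it feeds."""
    return {
        a: max([intrinsic[a]] + [intrinsic[d] for d in downstream(a, graph)])
        for a in graph
    }

scores = effective_criticality(SUPPLIES, INTRINSIC)
print(scores["compressor-3"])    # 5
print(scores["lighting-panel"])  # 1
```

The compressor scores low on its own but inherits the turbine's score because it feeds it. Taking the maximum is the simplest propagation rule; a fuller consequence analysis would also weigh factors such as redundant supply paths.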

- Accommodate multiple analytics. Rather than being limited to a prescribed set of analytics, the platform should accommodate multiple analytical tools and applications, as described previously. The right methodology will likely vary with the question being asked: Monte Carlo simulation might suit some circumstances and linear programming others, while some questions may call for a combination of the two alongside other analytics.

- Provide an open system for development. Much of the most creative and innovative technology is open source, conceived and developed by individuals or groups of developers. Whether or not companies choose to deploy open source technology, they will want an open system that allows applications to be built to answer questions beyond asset management as the need arises. Such a system lets utilities build in-house models with their own data scientists, or work with partners of their choice, while retaining ownership of their intellectual property.
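The two requirements above (multiple analytics and an open system for in-house models) can be sketched as a plug-in registry in which every analytic shares one calling convention, so a Monte Carlo model and a deterministic check can sit side by side and a utility's data scientists can add their own. The `register` decorator, the analytic names, and the normal load model are hypothetical illustrations, not a specific platform's API.

```python
import random
from typing import Callable, Dict

# Hypothetical plug-in interface: an analytic takes an input dict, returns a result dict.
Analytic = Callable[[dict], dict]
REGISTRY: Dict[str, Analytic] = {}

def register(name: str):
    """Decorator that adds an analytic to the registry under `name`."""
    def wrap(fn: Analytic) -> Analytic:
        REGISTRY[name] = fn
        return fn
    return wrap

@register("monte_carlo_overload")
def monte_carlo_overload(inputs: dict) -> dict:
    """Estimate P(load > capacity) by sampling load from an assumed normal distribution."""
    rng = random.Random(inputs.get("seed", 0))
    trials = inputs.get("trials", 10_000)
    hits = sum(
        rng.gauss(inputs["mean_load"], inputs["sd_load"]) > inputs["capacity"]
        for _ in range(trials)
    )
    return {"p_overload": hits / trials}

@register("headroom")
def headroom(inputs: dict) -> dict:
    """Deterministic check: capacity remaining at the mean load."""
    return {"headroom": inputs["capacity"] - inputs["mean_load"]}

def run(name: str, inputs: dict) -> dict:
    """Dispatch to whichever registered analytic answers the question."""
    return REGISTRY[name](inputs)

params = {"mean_load": 80.0, "sd_load": 10.0, "capacity": 100.0, "seed": 42}
print(run("headroom", params))  # {'headroom': 20.0}
print(run("monte_carlo_overload", params))
```

With these inputs, capacity sits two standard deviations above the mean load, so the exact overload probability is P(Z > 2) ≈ 0.023 and the sampled estimate should land near it. Because new analytics only need to follow the shared signature, an in-house model can be registered without changing the platform code, and the model itself stays with the utility.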

(Figure labels: Data source, store; Data source, real time)